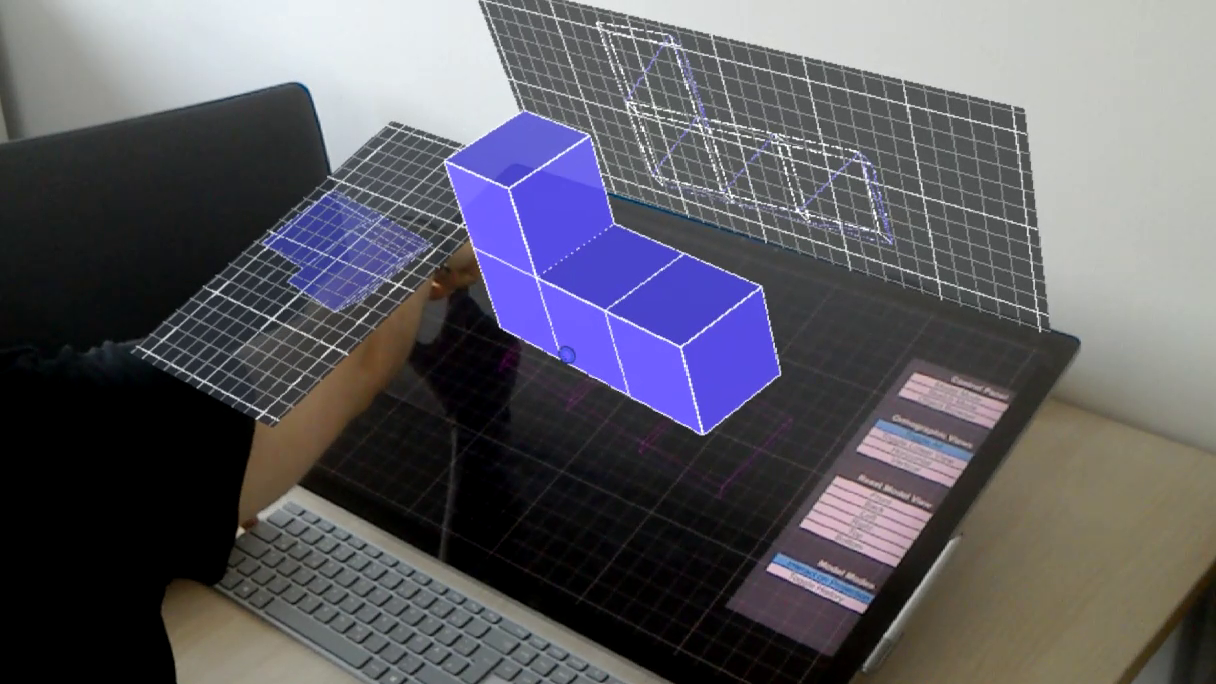

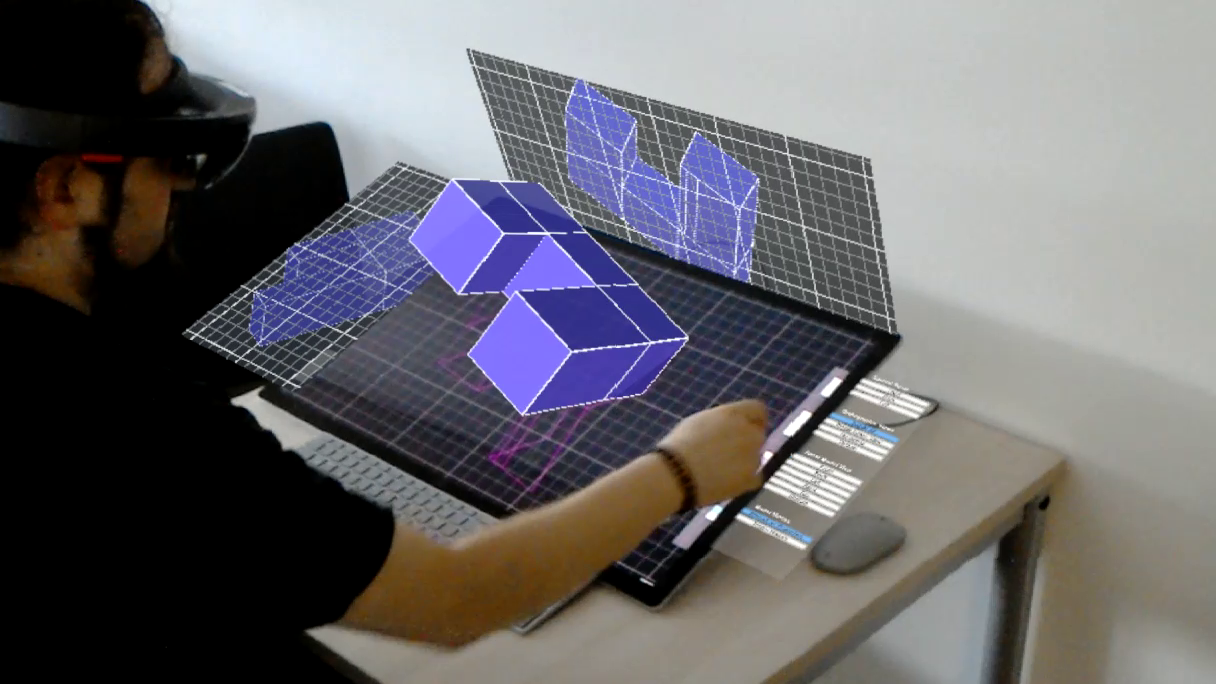

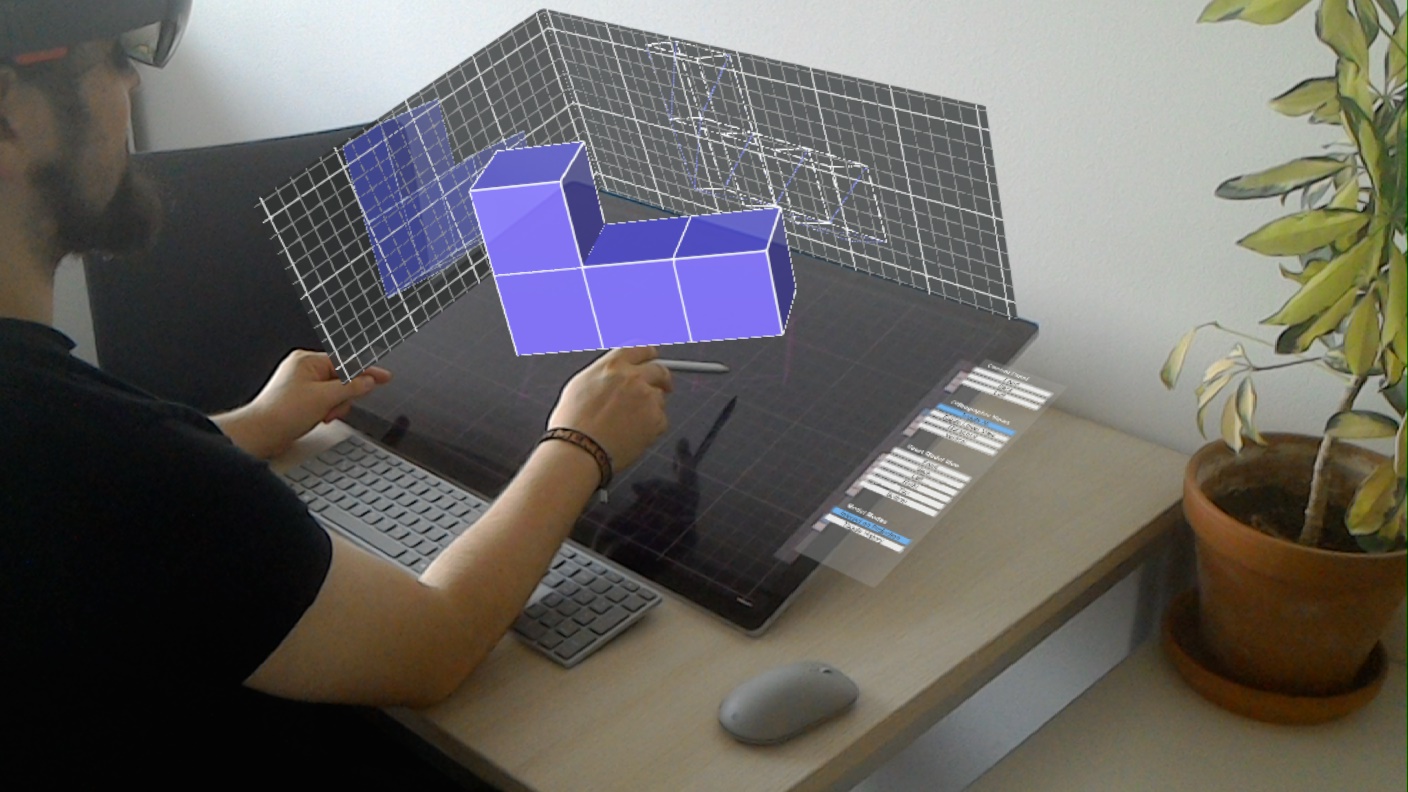

DesignAR is an immersive 3D modeling application that combines a large interactive design workstation with head-mounted Augmented Reality. Users manipulate the model using touch and pen interaction, while the model itself is displayed in stereoscopic Augmented Reality above the display. We also demonstrated how the space beyond the borders of the display can be used to show orthogonal views and offloaded menus. DesignAR was developed at the Interactive Media Lab Dresden and resulted in two publications: a full paper at ACM ISS 2019, which won the best paper award, and a demo at ACM CHI 2020.

Motivation

The idea for this project was based on the observation that, although touch screens and immersive technologies like Augmented Reality (AR) and Virtual Reality (VR) have advanced rapidly in recent years, most 3D modeling applications remain firmly rooted in the desktop. DesignAR was an attempt to explore a possible future for 3D applications: the modeled object is displayed in stereoscopic 3D, situated in the real-world environment, while natural pen and touch interaction is used to manipulate it in a simple and intuitive way. Another aspect was the general idea of combining AR head-mounted displays (HMDs) with interactive surfaces so that each compensates for the other's disadvantages, a combination we called Augmented Displays. While the prototype is of course not a full-fledged modeling application like 3ds Max, Maya or Blender, I’m tempted to say that we succeeded in realizing our vision of Augmented Displays and in providing a glimpse of a possible future for 3D modeling applications.

Team

I was the sole developer of this prototype.

Technology

We used a Microsoft Surface Studio as the interactive display and a HoloLens as the AR HMD. The applications for both devices were developed with the Unity 3D engine, with LeanTouch as the touch library. For the communication between both devices, a custom client-server solution based on TCP and Open Sound Control (OSC) was developed. Everything was programmed in C#.
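The post does not show the wire format, so the following is a minimal Python sketch (not the original C# code) of how an OSC 1.0 message could be encoded and framed for a TCP stream. It follows the OSC 1.0 rules: strings are null-terminated and padded to a multiple of four bytes, a type tag string starting with `,` describes the arguments, and numbers are big-endian. Because TCP is a byte stream, OSC 1.0 suggests prefixing each packet with its size as an int32; whether DesignAR used this length prefix or another framing (such as the SLIP encoding recommended by OSC 1.1) is an assumption. The address `/model/vertex` and all function names are hypothetical.

```python
import struct


def osc_string(s: str) -> bytes:
    """Encode an OSC-string: ASCII bytes, null-terminated, padded to a multiple of 4."""
    data = s.encode("ascii") + b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def osc_message(address: str, *args) -> bytes:
    """Encode an OSC 1.0 message with int32 ('i'), float32 ('f') and string ('s') arguments."""
    tags = ","
    payload = b""
    for arg in args:
        if isinstance(arg, int):
            tags += "i"
            payload += struct.pack(">i", arg)  # big-endian int32
        elif isinstance(arg, float):
            tags += "f"
            payload += struct.pack(">f", arg)  # big-endian float32
        elif isinstance(arg, str):
            tags += "s"
            payload += osc_string(arg)
        else:
            raise TypeError(f"unsupported argument type: {type(arg).__name__}")
    return osc_string(address) + osc_string(tags) + payload


def frame_for_tcp(packet: bytes) -> bytes:
    """OSC 1.0 stream framing: prefix each packet with its size as a big-endian int32."""
    return struct.pack(">i", len(packet)) + packet


def read_frames(buffer: bytes):
    """Split received bytes into complete packets; return (packets, leftover bytes)."""
    packets = []
    while len(buffer) >= 4:
        (size,) = struct.unpack(">i", buffer[:4])
        if len(buffer) < 4 + size:
            break  # packet not complete yet: wait for more data from the socket
        packets.append(buffer[4:4 + size])
        buffer = buffer[4 + size:]
    return packets, buffer
```

Since a single socket read may return half a packet or several packets at once, `read_frames` hands leftover bytes back to the caller instead of assuming one message per read.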

Development

As so often, DesignAR started with the development of a general framework for augmenting displays with arbitrary AR objects. The decision to use Unity was made quickly after attempts to port our earlier MonoGame prototype to the HoloLens failed. In retrospect, I would say this was a good decision, as Unity brought a lot of useful functionality to the table. Originally, the framework mainly served to unify the coordinate systems of both devices so that AR objects would appear at the right position relative to the display. Over time, it grew together with the rest of the prototype to support touch and pen interaction (building upon LeanTouch), easy network integration, custom shaders, and much more.
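The post does not describe how the two coordinate systems were aligned. As a minimal sketch, assuming the display’s pose in HoloLens world space is already known (for example from a one-time calibration), mapping between display pixels and world positions reduces to a few vector operations. The `DisplayPose` class, its fields and the example dimensions are hypothetical; only the default 4500 × 3000 resolution matches the Surface Studio.

```python
from dataclasses import dataclass

Vec3 = tuple[float, float, float]


def add(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def scale(v: Vec3, s: float) -> Vec3:
    return (v[0] * s, v[1] * s, v[2] * s)


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


@dataclass
class DisplayPose:
    """Hypothetical description of where the display sits in HoloLens world space."""
    origin: Vec3     # world position of the display's top-left corner (meters)
    right: Vec3      # unit vector along increasing pixel x
    down: Vec3       # unit vector along increasing pixel y (orthogonal to `right`)
    width_m: float   # physical size of the visible area (meters)
    height_m: float
    res_x: int = 4500
    res_y: int = 3000

    def pixel_to_world(self, px: float, py: float) -> Vec3:
        """Place a point given in display pixels (e.g. a touch) into world space."""
        u = px / self.res_x * self.width_m
        v = py / self.res_y * self.height_m
        return add(self.origin, add(scale(self.right, u), scale(self.down, v)))

    def world_to_pixel(self, p: Vec3) -> tuple[float, float]:
        """Project a world point onto the display plane and return pixel coordinates."""
        d = sub(p, self.origin)
        return (dot(d, self.right) / self.width_m * self.res_x,
                dot(d, self.down) / self.height_m * self.res_y)
```

With such a pose, a touch point can be turned into a world position for AR content, and AR content can be mapped back to pixels, for example to place 2D UI under a hologram.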

Network

One of the goals was always to integrate the HoloLens and the display so seamlessly that users would not notice they were working with two independent systems. One critical part of achieving this was a reliable, low-latency network solution. I decided on a client-server architecture so that an arbitrary number of displays and HMDs could easily be supported later on. To avoid maintaining different code bases and to be able to establish connections directly from the Unity editor, I aimed for a common code base that works for Win32 (server, display, Unity) as well as UWP (HoloLens), and succeeded using simple TCP sockets. To add a little more structure, Open Sound Control is used as the transmission protocol. On top of it sits a custom messaging system in which clients can register with the server, subscribe to events of other clients, get notified when clients join or leave, and so on. Over the course of the project, this network architecture became very robust and reliable, so network issues rarely occurred.
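To illustrate the messaging layer described above, here is a minimal, transport-free Python sketch of the server-side bookkeeping: clients register, subscribe to events of other clients, and are notified when clients join or leave. Sockets are replaced by plain callbacks, and the class name, method names and addresses such as `/client/joined` are hypothetical rather than DesignAR’s actual API.

```python
from collections import defaultdict
from typing import Any, Callable

# Stands in for "send an OSC message to this client's socket".
Send = Callable[[str, Any], None]


class MessageHub:
    """Hypothetical server-side registry for clients and event subscriptions."""

    def __init__(self) -> None:
        self.clients: dict[str, Send] = {}
        # (publisher id, event name) -> ids of the subscribed clients
        self.subscriptions = defaultdict(set)

    def register(self, client_id: str, send: Send) -> None:
        """Add a client and tell everyone already connected that it joined."""
        for other_send in self.clients.values():
            other_send("/client/joined", client_id)
        self.clients[client_id] = send

    def unregister(self, client_id: str) -> None:
        """Remove a client, drop its subscriptions and tell the others that it left."""
        self.clients.pop(client_id, None)
        for subscribers in self.subscriptions.values():
            subscribers.discard(client_id)
        for other_send in self.clients.values():
            other_send("/client/left", client_id)

    def subscribe(self, subscriber: str, publisher: str, event: str) -> None:
        self.subscriptions[(publisher, event)].add(subscriber)

    def publish(self, publisher: str, event: str, payload: Any) -> None:
        """Forward an event only to the clients that subscribed to it."""
        for subscriber in sorted(self.subscriptions.get((publisher, event), set())):
            if subscriber in self.clients:
                self.clients[subscriber](f"/{publisher}/{event}", payload)
```

In a networked version, each callback would presumably encode the address and payload as an OSC message and write it to that client’s TCP connection.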

Modeling

Another big challenge was the modeling functionality itself. I must confess that I underestimated its complexity, both regarding the modeling data itself and DesignAR’s special setup. In the end, I built a logical representation of the model with vertices, edges and polygons, to which all modeling functions are applied and which is then converted into a triangle mesh for rendering. Because the model floats in front of the user above the display, manipulating it with touch and pen proved challenging as well. When a user starts modeling, e.g., by drawing a line with the pen, a plane is fitted through the start and end points of the line and the current position of the user’s head; this plane is used to calculate the intersections with the logical representation of the model. Depending on these intersections, new vertices and edges are introduced. One problem we faced was that users had to reach through the model to touch the interactive surface, which led to perception issues. To solve this, the model is projected onto the 2D surface, using the user’s current head position for the correct perspective, as soon as a modeling operation starts. Afterwards, the model is smoothly transferred back into stereoscopic AR.
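Both steps described above come down to intersecting lines with planes. The following Python sketch is an illustration, not the original implementation: it builds the plane through the stroke’s start point, end point and the head position, finds the model edges that cross it, and projects a model point onto the display plane along the line of sight from the head. It simplifies by treating the cutting plane as infinite and by ignoring vertices that lie exactly on it; all function names are hypothetical.

```python
Vec3 = tuple[float, float, float]


def add(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def scale(v: Vec3, s: float) -> Vec3:
    return (v[0] * s, v[1] * s, v[2] * s)


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def plane_from_points(a: Vec3, b: Vec3, c: Vec3):
    """Plane through three points as (normal, d) with dot(normal, x) == d."""
    n = cross(sub(b, a), sub(c, a))
    return n, dot(n, a)


def cut_edges(vertices: list, edges: list, plane) -> dict:
    """Return {edge: new vertex position} for each edge that crosses the plane."""
    n, d = plane
    hits = {}
    for i, j in edges:
        si = dot(n, vertices[i]) - d  # signed (unnormalized) distances to the plane
        sj = dot(n, vertices[j]) - d
        if si * sj < 0:               # endpoints lie on opposite sides
            t = si / (si - sj)        # interpolation parameter along the edge
            hits[(i, j)] = add(vertices[i], scale(sub(vertices[j], vertices[i]), t))
    return hits


def project_to_display(point: Vec3, eye: Vec3, plane_point: Vec3, plane_normal: Vec3):
    """Intersect the line of sight from the eye through `point` with the display plane."""
    direction = sub(point, eye)
    denom = dot(plane_normal, direction)
    if abs(denom) < 1e-12:
        return None  # line of sight parallel to the display
    t = dot(plane_normal, sub(plane_point, eye)) / denom
    return add(eye, scale(direction, t))
```

Applying `project_to_display` to every vertex gives the flattened, head-correct view shown while a modeling operation is in progress; blending between the projected and original positions would produce the smooth transition back into AR.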

Border Views and Interaction

One central aspect we wanted to explore with this prototype was how the space beyond the borders of the display can be used to show additional AR content. For a change, this proved quite easy from a technical point of view. For the offloaded menus, there are simply two identical copies, one on the HoloLens and one on the display, which are clipped at the display border. When the menu slides towards the display’s border, the display copy simply moves out of the camera’s view. Synchronously, the HoloLens copy moves along and appears as soon as it reaches the edge of the clipping area. For the orthographic wireframe views, additional orthographic cameras were placed in the scene which render only the model (and nothing else) in wireframe mode into a texture. This texture is then applied to a simple quad, and the orthographic AR view is complete. Interaction at the borders is realized by placing colliders at the corresponding positions and recognizing gestures using LeanTouch.
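The clipping approach for the offloaded menus can be summarized as splitting one menu extent between two renderers. The sketch below is a hypothetical one-dimensional simplification in Python: given the menu’s horizontal extent in display-local meters, it computes which part the display copy shows and which part the HoloLens copy has to show outside the display. In the prototype itself, this split results implicitly from the display camera’s view and the clipping on the HoloLens rather than from explicit code like this.

```python
def split_menu(menu_left: float, menu_width: float, display_width: float):
    """Return (display part, AR part) as lists of (start, end) intervals.

    Coordinates are display-local meters along the display's x-axis, with the
    display spanning [0, display_width]; everything outside is shown by the HoloLens.
    """
    menu_right = menu_left + menu_width
    on_display = []
    lo, hi = max(menu_left, 0.0), min(menu_right, display_width)
    if lo < hi:
        on_display.append((lo, hi))
    in_ar = []
    if menu_left < 0.0:  # part sticking out past the left border
        in_ar.append((menu_left, min(menu_right, 0.0)))
    if menu_right > display_width:  # part sticking out past the right border
        in_ar.append((max(menu_left, display_width), menu_right))
    return on_display, in_ar
```

Because both copies share the same offset, the menu appears to slide continuously across the border; only the device responsible for rendering each part changes.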

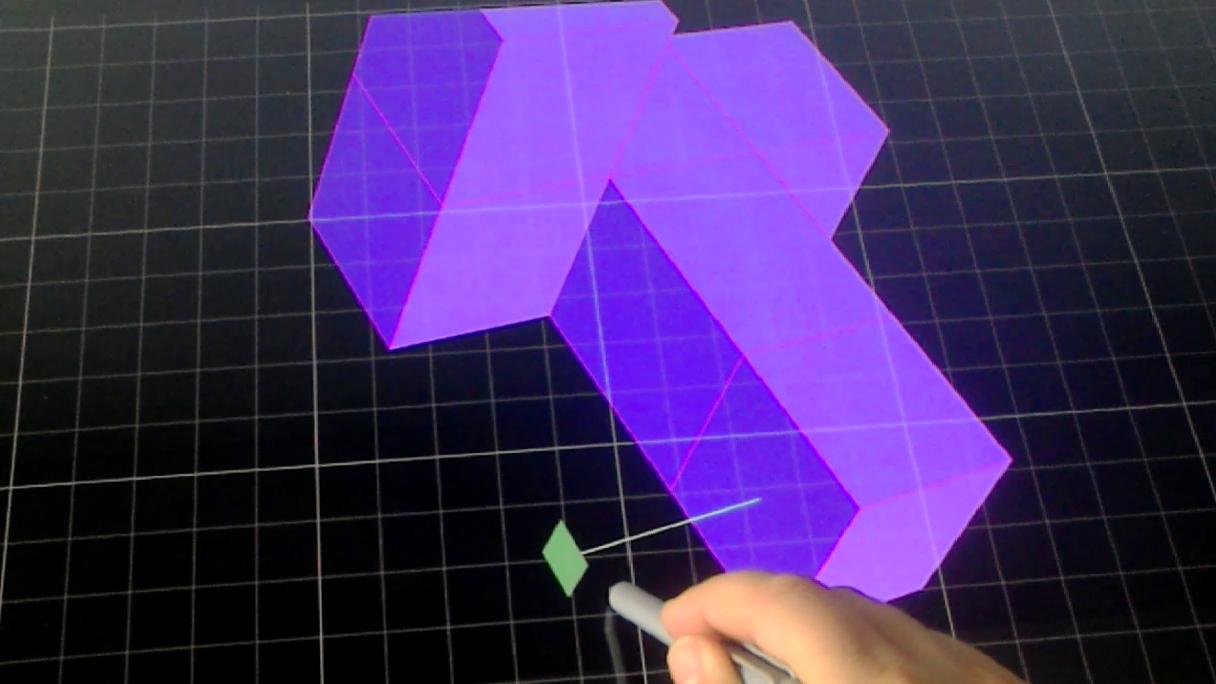

Sketching

One of the earliest ideas for DesignAR was to use the digital pen to sketch a contour, which could then be used to create a rotational solid. However, it got pushed aside a little when the other concepts took shape. Ultimately, it was simply too cool to leave out. When a curve is drawn, it is first down-sampled with a self-developed algorithm to reduce complexity. The goal was to keep sharp turns intact in the final curve, so the algorithm looks for extrema at which to insert points. Each point is then converted into polar coordinates, so that varying the angle rotates the final points around a common center. The result is triangulated and rendered as a surface model. I also experimented with fitting Bézier splines to the curve, which can then be further manipulated. While this worked, it did not make it into the final prototype because it was simply too complex for showcasing the simple principle of drawing an object with the pen. Instead, we added another example in which the contour of an existing object can be sketched and then extruded to create a 3D model.
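The rotation step can be sketched as a classic surface of revolution (a "lathe"). The Python sketch below assumes the down-sampled contour is already given as (radius, height) pairs relative to the rotation axis; the self-developed down-sampling algorithm is not reproduced. Each profile point is swept around the axis in `segments` angular steps, and neighboring rings are connected with two triangles per quad. The function name and the default segment count are illustrative.

```python
import math


def lathe(profile: list, segments: int = 32):
    """Sweep a (radius, height) profile around the y-axis.

    Returns (vertices, triangles); triangles index into vertices.
    """
    vertices = []
    for r, y in profile:
        for k in range(segments):
            theta = 2.0 * math.pi * k / segments  # polar angle of this copy of the point
            vertices.append((r * math.cos(theta), y, r * math.sin(theta)))

    triangles = []
    for i in range(len(profile) - 1):          # connect ring i with ring i + 1
        for k in range(segments):
            k_next = (k + 1) % segments        # wrap around to close the surface
            a = i * segments + k
            b = i * segments + k_next
            c = (i + 1) * segments + k
            d = (i + 1) * segments + k_next
            triangles.append((a, c, b))        # two triangles per quad
            triangles.append((b, c, d))
    return vertices, triangles
```

Depending on the engine’s handedness and culling settings, the triangle winding may need to be flipped.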

3D Instances in AR

Most of DesignAR’s concepts were centered on doing AR in close proximity to the display. However, we still asked ourselves how to utilize the larger environment surrounding it. The answer was quite simple: 3D models are often built for a specific purpose, and increasingly this purpose is 3D printing. Instead of wasting a lot of material until the model has the desired shape, AR can be used to place a copy of the modeled object directly into the surrounding space, modify it until it fits the requirements, and then print it only once. These AR instances, as we called them, are no longer manipulated using touch interaction because they should be spatially independent of the display. Instead, we used the mid-air interaction built into the HoloLens and a custom transformation widget I built, which translates and rotates them along all three axes and scales them uniformly. The 3D instances are updated dynamically whenever the model on the display changes, which makes them a great way to perceive how the final model will integrate into its environment.
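The key property of the AR instances is that they reference the live model instead of copying it, while carrying their own transform from the widget. A minimal Python sketch of that idea follows; the class names are hypothetical, and the right-handed Euler rotation order (X, then Y, then Z) is chosen purely for illustration (Unity itself uses a left-handed coordinate system).

```python
import math
from dataclasses import dataclass

Vec3 = tuple[float, float, float]


def rotate_x(v: Vec3, a: float) -> Vec3:
    x, y, z = v
    c, s = math.cos(a), math.sin(a)
    return (x, y * c - z * s, y * s + z * c)


def rotate_y(v: Vec3, a: float) -> Vec3:
    x, y, z = v
    c, s = math.cos(a), math.sin(a)
    return (x * c + z * s, y, -x * s + z * c)


def rotate_z(v: Vec3, a: float) -> Vec3:
    x, y, z = v
    c, s = math.cos(a), math.sin(a)
    return (x * c - y * s, x * s + y * c, z)


@dataclass
class Model:
    vertices: list  # edited via pen and touch on the display


@dataclass
class ARInstance:
    model: Model                             # shared reference, not a copy
    position: Vec3 = (0.0, 0.0, 0.0)         # set by the translation handles
    rotation: Vec3 = (0.0, 0.0, 0.0)         # Euler angles in radians, one per axis
    scale: float = 1.0                       # uniform scale only

    def world_vertices(self) -> list:
        """Apply scale, then rotation (X, Y, Z), then translation to the current model."""
        rx, ry, rz = self.rotation
        out = []
        for v in self.model.vertices:
            p = (v[0] * self.scale, v[1] * self.scale, v[2] * self.scale)
            p = rotate_z(rotate_y(rotate_x(p, rx), ry), rz)
            out.append((p[0] + self.position[0],
                        p[1] + self.position[1],
                        p[2] + self.position[2]))
        return out
```

Because `world_vertices` reads `model.vertices` on every call, edits made on the display show up in every instance the next time it is rendered.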

Verdict

DesignAR was a technically difficult project that posed challenges in many areas. But in hindsight, it was also a very fun project in which new ground was covered at every step. Finding robust, sophisticated solutions for most of these issues proved quite exciting, and I can say with confidence that I’m quite proud of the final result.

For our concepts to become practically applicable, AR HMDs still need to advance a lot further. The small field of view of the HoloLens in particular is a major problem when sitting close to a large screen. But once these technological issues are resolved, I’m convinced that our approach could really improve existing modeling applications.