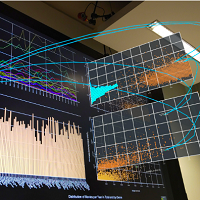

AvatAR is an Augmented Reality visualization and analysis environment that combines virtual human avatars and 3D trajectories with additional techniques to visualize human motion data in great detail. The visualizations can be manipulated either through gestures or through an accompanying tablet, which also acts as an overview device. AvatAR was developed at Autodesk Research and was published as a full paper and presented at the ACM Conference on Human Factors in Computing Systems 2022 (CHI’22).